What is an LLM Model?

LLM stands for "Large Language Model." It is a type of artificial intelligence model designed to understand and generate human-like text based on the input it receives. LLMs are trained on very large amounts of text data, which can come from various sources, such as books, news articles, social media posts, and web pages. These models are part of the broader field of Natural Language Processing (NLP), which focuses on enabling computers to understand, interpret, and generate human language.

LLMs use deep learning techniques to process and understand natural languages, such as English, Hindi, and Chinese. Natural language is the way humans communicate with each other, using words, sentences, and grammar. Processing and understanding natural language is a challenging task for computers because natural language is often ambiguous, inconsistent, and context-dependent. For example, the word “bank” can have different meanings depending on the situation: it can refer to a financial institution, the side of a river, or an aircraft manoeuvre.

How do LLM Models Work?

LLMs use a type of neural network called a transformer, which excels at handling sequential data such as language. The original transformer consists of two main components: an encoder and a decoder. The encoder processes the input text and converts it into a sequence of vectors that represent the meaning and context of each word. The decoder generates the output text one word at a time, predicting the next word based on the previous words and the encoder’s vectors. Many LLMs use only one half of this design: GPT-3, for example, is decoder-only, while BERT is encoder-only.
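
At the heart of the transformer is a step called attention, in which each word's vector is updated using the vectors of the other words around it; this is one of the main ways the model brings context into its representation of a word such as "bank". Below is a minimal, pure-Python sketch of scaled dot-product attention, assuming tiny hand-picked 2-dimensional vectors. The token vectors and function names are illustrative only; real models use learned projection matrices, many attention heads, and vectors with thousands of dimensions.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1 (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def attention(queries, keys, values):
    """Scaled dot-product attention: each output row is a weighted average of
    `values`, weighted by how well that row's query matches every key."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d_k) for k in keys])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Hypothetical 2-dimensional vectors for three tokens, e.g. "river", "bank", "money".
# Self-attention: queries, keys and values all come from the same token vectors
# (real models first multiply them by learned weight matrices).
tokens = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Each output vector is a blend of all three input vectors, so the new vector for "bank" now carries information from its neighbours.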

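Did you know that GPT-3 has 175 billion parameters? When it was released in 2020, it was one of the largest language models in the world, and it can generate a wide variety of text. Even larger models have been built since then.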
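
To get a feel for what 175 billion parameters means, here is a back-of-the-envelope calculation of how much storage the weights alone would take. It assumes each parameter is stored as a 32-bit or a 16-bit floating-point number and ignores everything else a running model needs (activations, optimizer state, and so on); the helper name is hypothetical.

```python
PARAMETERS = 175_000_000_000  # GPT-3's published parameter count

def weights_size_gb(num_params, bytes_per_param):
    """Storage needed just for the weights, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 10**9

for name, nbytes in [("32-bit floats", 4), ("16-bit floats", 2)]:
    print(f"{name}: {weights_size_gb(PARAMETERS, nbytes):.0f} GB")
```

That comes to 700 GB at 32-bit precision and 350 GB at 16-bit precision, far more than a single consumer GPU can hold.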

One of the key features of LLM models is that they are first pre-trained and then fine-tuned. Pre-training means that the model learns from a large, general corpus of text data, without any specific task or goal in mind. This way, the model can acquire a broad and rich knowledge of natural language. Fine-tuning means that the model is further trained on a smaller, more specific corpus of text data, with a particular task or goal in mind. This way, the model can adapt to the requirements and preferences of the task. For example, a pre-trained LLM can be fine-tuned to generate jokes, poems, stories, code, essays, songs, celebrity parodies, and more, drawing on the knowledge it acquired during pre-training.
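
The difference between pre-training and fine-tuning can be illustrated with a deliberately tiny stand-in for an LLM: a count-based bigram model that predicts the next word from the previous one. This stand-in is an assumption made for illustration only; real LLMs adjust billions of neural-network weights by gradient descent rather than counting word pairs. The shared idea is that fine-tuning starts from the pre-trained model and keeps training that same model on a smaller, task-specific corpus. All corpora and function names below are hypothetical.

```python
from collections import Counter, defaultdict

def train(model, corpus):
    """Update bigram counts in place: model[prev][next] += 1 for each word pair."""
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            model[prev][nxt] += 1
    return model

def predict_next(model, word):
    """Return the most frequent word seen after `word`, or None if `word` is unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Pre-training: broad, general-purpose text (a tiny stand-in corpus).
general_corpus = [
    "the cat sat on the mat",
    "the cat sat by the door",
    "the cat chased the dog",
]
model = train(defaultdict(Counter), general_corpus)
before = predict_next(model, "cat")

# Fine-tuning: keep the same model and continue training on task-specific text.
joke_corpus = [
    "the cat is purr-fect",
    "my cat is hilarious",
    "a cat is funny",
]
train(model, joke_corpus)
after = predict_next(model, "cat")
print(before, "->", after)
```

After pre-training the model predicts "sat" after "cat"; a little fine-tuning on joke-style text shifts the prediction to "is", while everything else it learned is kept.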

Why Do LLM Models Exist?

LLM models exist for several reasons:

Natural Language Understanding: LLMs can understand and interpret human language, making them useful for a wide range of applications like chatbots, virtual assistants, and sentiment analysis.

Text Generation: They can generate human-like text, which is valuable for content creation, text completion, and even creative writing.

Language Translation: LLMs can translate text from one language to another with high accuracy, aiding in breaking down language barriers.

Information Retrieval: They help in extracting relevant information from large text datasets, making it easier to find specific information.

Summarization: LLMs can automatically summarize long articles or documents, saving time for readers.

Examples of LLM Models:

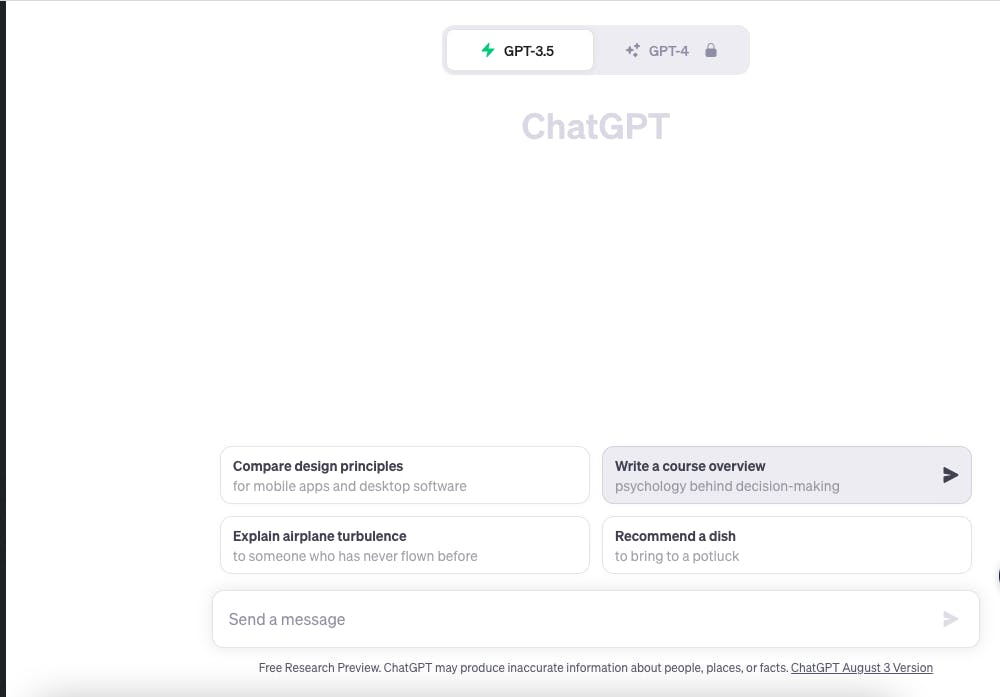

- GPT-3 (Generative Pre-trained Transformer 3): GPT-3 is one of the most famous LLMs, developed by OpenAI. It can complete sentences and paragraphs, or even generate entire articles based on a given prompt. For example, you can give it a prompt like, "Write a short story about a detective," and it will generate a story.

- BERT (Bidirectional Encoder Representations from Transformers): BERT, developed at Google, is another well-known LLM. Because it looks at the words on both sides of each position, it is great at understanding the context of a text. For instance, when given a passage that contains the answer, a BERT model fine-tuned for question answering can locate the answer to a question like, "Who won the Nobel Prize in Literature in 2020?"

- T5 (Text-to-Text Transfer Transformer): T5, also developed at Google, frames every NLP task as a text-to-text task. For instance, for translation you would input "translate English to French: The weather is nice," and it would generate the French translation.

- RoBERTa (A Robustly Optimized BERT Pretraining Approach): RoBERTa is an improvement upon BERT that pre-trains on more data, for longer, with larger batches and longer sequences, leading to better performance.
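
The models above differ mainly in what they are trained to predict. The following plain-Python sketch builds one training example of each kind from the same sentence. It is a simplification: it treats whole words as tokens (real models use subword tokens), masks one chosen word where BERT masks about 15% of tokens at random, and uses hypothetical helper names.

```python
def causal_lm_examples(tokens):
    """GPT-style: each prefix of the sentence is paired with the word that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def masked_lm_example(tokens, position, mask="[MASK]"):
    """BERT-style (simplified): hide one word; the model sees the words on BOTH sides."""
    masked = tokens[:position] + [mask] + tokens[position + 1:]
    return masked, tokens[position]

def text_to_text_example(task_prefix, text):
    """T5-style: every task becomes 'input string -> output string' with a task prefix."""
    return f"{task_prefix}: {text}"

sentence = "the weather is nice".split()
print(causal_lm_examples(sentence))
print(masked_lm_example(sentence, 2))
print(text_to_text_example("translate English to French", "The weather is nice"))
```

A GPT-style model only ever sees the words to the left of the one it predicts, whereas BERT (and RoBERTa, which keeps BERT's masked-word objective) sees both sides, which is why it is well suited to understanding context.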

Possible Questions:

How do LLM models learn language? LLMs learn language by analyzing vast amounts of text data and identifying patterns in the language.

What are the applications of LLM models? LLMs are used in chatbots, content generation, translation, summarization (creating short versions of texts), classification (assigning labels to texts), question answering (providing answers based on texts), sentiment analysis (detecting emotions in texts), and more.

Are there any ethical concerns with LLM models? Yes. There are concerns about biases in the training data, misuse of LLMs to produce misinformation, and the automation of tasks in ways that could lead to job displacement.

How do LLMs compare to traditional rule-based systems? LLMs are data-driven and can handle a wide range of language tasks, whereas rule-based systems rely on predefined rules and may be less flexible.
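
For GPT-style models, "identifying patterns" (from the first question above) concretely means learning to predict the next word. Training measures how surprised the model was by the true next word using the cross-entropy loss, -log p(true word), and adjusts the weights to make that loss smaller. The sketch below computes this loss for a single prediction; the vocabulary and scores are made-up values, not output from a real model, and the function names are hypothetical.

```python
import math

def softmax(logits):
    """Convert raw model scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_word_loss(logits, vocab, target):
    """Cross-entropy for one prediction: -log(probability given to the true next word)."""
    probs = softmax(logits)
    return -math.log(probs[vocab.index(target)])

vocab = ["mat", "dog", "moon"]
# Hypothetical scores a model might assign to each candidate after "the cat sat on the ..."
logits = [2.0, 1.0, 0.1]
print(round(next_word_loss(logits, vocab, "mat"), 3))   # low loss: the likely word was correct
print(round(next_word_loss(logits, vocab, "moon"), 3))  # high loss: an unlikely word was correct
```

Repeating this over billions of words, and nudging the weights each time to lower the loss, is what gradually teaches the model the patterns of a language.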

Here are some examples of what LLM models can do:

I bet you can’t guess what this text is about: “It was a dark and stormy night. The wind howled and the rain poured. A lone figure stood on the balcony, holding a device that looked like a magic wand. He pointed it at the sky and uttered a word. Suddenly, a bright flash of light illuminated the clouds, revealing a giant dragon.”

- Generate summaries:

Input: A long article about climate change.

Output: Climate change is a global phenomenon that affects the environment, society, economy, health, and security. It is caused by human activities that emit greenhouse gases into the atmosphere, which trap heat and warm up the planet. The consequences of climate change include rising sea levels, melting ice caps, extreme weather events, droughts, floods, wildfires, biodiversity loss, food insecurity, migration, conflicts, diseases, and deaths. To mitigate climate change, it is necessary to reduce greenhouse gas emissions, adopt renewable energy sources, improve energy efficiency, conserve natural resources, and adapt to changing conditions.

- Generate jokes:

Input: Tell me a joke about cats.

Output: What do you call a cat that likes to swim? A purr-maid.

- Generate stories:

Input: Write a short story about a dragon and a princess.

Output: Princess Lila was bored of her life in the castle. She longed for adventure and excitement, but all she had to do was attend boring lessons and parties. One day, she decided to sneak out of the castle and explore the nearby forest. She hoped to find some interesting animals or plants, or maybe even a fairy or a unicorn.

She wandered deeper and deeper into the forest until she came across a large cave. She was curious and decided to enter the cave. She lit a torch and walked inside. The cave was dark and damp, and she could hear strange noises echoing from within. She followed the noises until she reached a large chamber. There, she saw a huge dragon sleeping on a pile of gold and jewels. The dragon was red and scaly, with horns, spikes, wings, and a long tail. It breathed fire every time it snored.

Princess Lila was terrified but also fascinated. She had never seen such a magnificent creature before. She wanted to get closer and touch it, but she knew it was too dangerous. She decided to take a picture of it with her phone instead. She quietly took out her phone and snapped a photo of the dragon. However, she forgot to turn off the flash.

The flash startled the dragon and woke it up. It opened its eyes and saw the princess standing in front of it. It roared loudly and breathed fire at her. Princess Lila screamed and ran away, dropping her phone on the ground. The dragon chased after her, angry and hungry.

Princess Lila ran as fast as she could, but the dragon was faster. It caught up with her and grabbed her with its claws. It lifted her up in the air and brought her closer to its mouth. Princess Lila thought she was going to die.

However, before the dragon could eat her, it noticed something on her phone’s screen. It was the photo of itself that she had taken. The dragon was amazed by its own image. It had never seen itself before. It realized how beautiful and majestic it looked. It felt a surge of pride and vanity.

The dragon decided not to eat the princess, but to keep her as a pet instead. It thought that having a human pet would make it look even more impressive and powerful. It brought her back to its chamber and put her on its pile of gold and jewels. It told her that she was now its property and that she had to obey its every command.

Princess Lila was horrified by her situation. She wished she had never left the castle. She wondered if anyone would ever come to rescue her from the dragon’s clutches.

- Generate code:

Input: Write a Python function that takes two numbers as arguments and returns their sum.

Output:

    def add_numbers(a, b):
        # This function takes two numbers as arguments and returns their sum
        return a + b

In summary, LLM models are powerful tools in the field of natural language processing, capable of understanding, generating, and manipulating human language. They have diverse applications and are a key component of many AI-driven technologies that involve language understanding and generation.

“The limits of my language mean the limits of my world.” - Ludwig Wittgenstein

I hope this explanation has helped you understand what LLM models are and how they are used in generative AI. If you have any questions or feedback, please let me know. Thank you for your interest in this topic 😊

#LLM #LanguageModels